IPython/Vision Beam Pattern Demo

Note

This page has not been updated to reflect the recent work on ipcluster. This work makes it much easier to use IPython on a cluster.

Installing and testing IPython at OSC systems

I installed all components from source, with my environment set up to include ~/usr/local in the relevant paths ($PATH, $PYTHONPATH, etc.). Other than a slow filesystem for unpacking tarballs, the install went without a hitch. For each needed component, I downloaded the source tarball and unpacked it via:

tar xzf filename.tar.gz # or, for bz2: tar xjf filename.tar.bz2

and then installed them (including IPython itself) with:

cd dirname/ # path to unpacked tarball

python setup.py install --prefix=~/usr/local/
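The same unpack-and-install steps can be scripted. The following is a minimal sketch, not part of the original setup: the helper names `unpack_tarball` and `install_command` are hypothetical, and it assumes each tarball unpacks into a single top-level directory that contains `setup.py`.

```python
import os
import sys
import tarfile


def unpack_tarball(tarball, dest="."):
    """Unpack a .tar.gz or .tar.bz2 file into dest; return the top-level names."""
    # Mode "r:*" lets tarfile detect gzip or bzip2 compression on its own,
    # which replaces the manual choice between `tar xzf` and `tar xjf`.
    with tarfile.open(tarball, "r:*") as tar:
        names = tar.getnames()
        tar.extractall(dest)
    return sorted({name.split("/")[0] for name in names})


def install_command(prefix="~/usr/local"):
    """Build the `setup.py install` command line for the given prefix."""
    # Expand "~" here rather than relying on the shell to do it.
    return [sys.executable, "setup.py", "install",
            "--prefix=" + os.path.expanduser(prefix)]
```

Something like `subprocess.check_call(install_command(), cwd=dirname)` would then run the install from inside the unpacked directory.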

The components I installed are listed below. For each one I give the main project link as well as a direct link to the file I actually downloaded and used.

- nose, used for testing:

http://somethingaboutorange.com/mrl/projects/nose/ http://somethingaboutorange.com/mrl/projects/nose/nose-0.10.3.tar.gz

- Zope interface, used to declare interfaces in twisted and ipython. Note: you must get this from the PyPI page linked below and not from the default one (http://www.zope.org/Products/ZopeInterface), because the latter has an older version that hasn’t been updated in a long time. The PyPI page has the current release (3.4.1 as of this writing):

http://pypi.python.org/pypi/zope.interface http://pypi.python.org/packages/source/z/zope.interface/zope.interface-3.4.1.tar.gz

- pyopenssl, security layer used by foolscap. Note: version 0.6 must be used:

http://sourceforge.net/projects/pyopenssl/ http://downloads.sourceforge.net/pyopenssl/pyOpenSSL-0.6.tar.gz?modtime=1212595285&big_mirror=0

- Twisted, used for all networking:

http://twistedmatrix.com/trac/wiki/Downloads http://tmrc.mit.edu/mirror/twisted/Twisted/8.1/Twisted-8.1.0.tar.bz2

- Foolscap, used for managing connections securely:

http://foolscap.lothar.com/trac http://foolscap.lothar.com/releases/foolscap-0.3.1.tar.gz

- IPython itself:

http://ipython.scipy.org/ http://ipython.scipy.org/dist/ipython-0.9.1.tar.gz
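Before running the test suite, it can help to confirm that every component is importable from the Python in use. This is a small sketch, not something from the original notes: `check_modules` and `COMPONENTS` are hypothetical names, and the import names are my assumption of what each package installs (pyOpenSSL, for example, imports as `OpenSSL`).

```python
import importlib


def check_modules(names):
    """Return {module name: version string, or None if the import fails}."""
    found = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            found[name] = None
            continue
        # Not every package exposes __version__, so fall back to "unknown".
        found[name] = str(getattr(module, "__version__", "unknown"))
    return found


# Assumed import names for the components listed above.
COMPONENTS = ["nose", "zope.interface", "OpenSSL", "twisted", "foolscap", "IPython"]

if __name__ == "__main__":
    for name, version in sorted(check_modules(COMPONENTS).items()):
        print("%-16s %s" % (name, version if version else "MISSING"))
```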

I then ran the ipython test suite via:

iptest -vv

and it passed except for a single error:

======================================================================

ERROR: testGetResult_2

----------------------------------------------------------------------

DirtyReactorAggregateError: Reactor was unclean.

Selectables:

<Negotiation #0 on 10105>

----------------------------------------------------------------------

Ran 419 tests in 33.971s

FAILED (SKIP=4, errors=1)

In three more runs of the test suite I was able to reproduce this error sometimes but not always. For now I think we can move on, but we need to investigate further, especially if we start seeing problems in real use (the test suite stresses the networking layer in particular ways that aren’t necessarily typical of normal use).
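To put a number on "sometimes but not always", the summary lines of each run can be parsed and tallied. The sketch below is only an illustration: `parse_summary` is a hypothetical helper, and it assumes the unittest-style `Ran N tests` and `OK`/`FAILED (...)` lines seen in the output above.

```python
import re


def parse_summary(output):
    """Extract test count and failure counters from unittest-style output."""
    summary = {"ran": None, "ok": None, "counts": {}}
    ran = re.search(r"^Ran (\d+) tests? in", output, re.MULTILINE)
    if ran:
        summary["ran"] = int(ran.group(1))
    status = re.search(r"^(OK|FAILED)(?: \((.*)\))?\s*$", output, re.MULTILINE)
    if status:
        summary["ok"] = status.group(1) == "OK"
        if status.group(2):
            # The parenthesised part looks like "SKIP=4, errors=1".
            for item in status.group(2).split(","):
                key, value = item.strip().split("=")
                summary["counts"][key] = int(value)
    return summary
```

Feeding it the captured output of several `iptest -vv` runs and counting those where `counts.get("errors")` is nonzero would give a rough failure rate for the intermittent error.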

Next, I started an 8-engine cluster via:

perez@opt-login01[~]> ipcluster -n 8

Starting controller: Controller PID: 30845

Starting engines: Engines PIDs: [30846, 30847, 30848, 30849, 30850, 30851, 30852, 30853]

Log files: /home/perez/.ipython/log/ipcluster-30845-*

Your cluster is up and running.

[... etc]

and in a separate ipython session checked that the cluster was running and that I could access all the engines:

In [1]: from IPython.kernel import client

In [2]: mec = client.MultiEngineClient()

In [3]: mec.get_ids()

Out[3]: [0, 1, 2, 3, 4, 5, 6, 7]

and ran trivial code on them (after importing the random module in all engines):

In [11]: mec.execute("x=random.randint(0,10)")

Out[11]:

<Results List>

[0] In [3]: x=random.randint(0,10)

[1] In [3]: x=random.randint(0,10)

[2] In [3]: x=random.randint(0,10)

[3] In [3]: x=random.randint(0,10)

[4] In [3]: x=random.randint(0,10)

[5] In [3]: x=random.randint(0,10)

[6] In [3]: x=random.randint(0,10)

[7] In [3]: x=random.randint(0,10)

In [12]: mec.pull('x')

Out[12]: [10, 0, 8, 10, 2, 9, 10, 7]
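For readers without a cluster at hand, the semantics of `execute` and `pull` in the session above can be mimicked locally. This is a toy stand-in under loud assumptions: `ToyMultiEngine` is a hypothetical class, it is not IPython's implementation, and it runs everything serially in one process. It only shows that each engine keeps its own namespace and that `pull` returns one value per engine.

```python
class ToyMultiEngine:
    """Toy stand-in for a multi-engine client: one namespace per engine."""

    def __init__(self, n_engines):
        self.namespaces = [{} for _ in range(n_engines)]

    def get_ids(self):
        return list(range(len(self.namespaces)))

    def execute(self, code):
        # Run the same source string once per engine, each in its own namespace.
        for namespace in self.namespaces:
            exec(code, namespace)

    def pull(self, name):
        # Collect the value bound to `name` on every engine, in engine order.
        return [namespace[name] for namespace in self.namespaces]


mec = ToyMultiEngine(8)
mec.execute("import random")
mec.execute("x = random.randint(0, 10)")
values = mec.pull("x")  # eight independent draws, one per engine
```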

We’ll continue conducting more complex tests later, including installing Vision locally and running the beam demo.

Michel’s original instructions

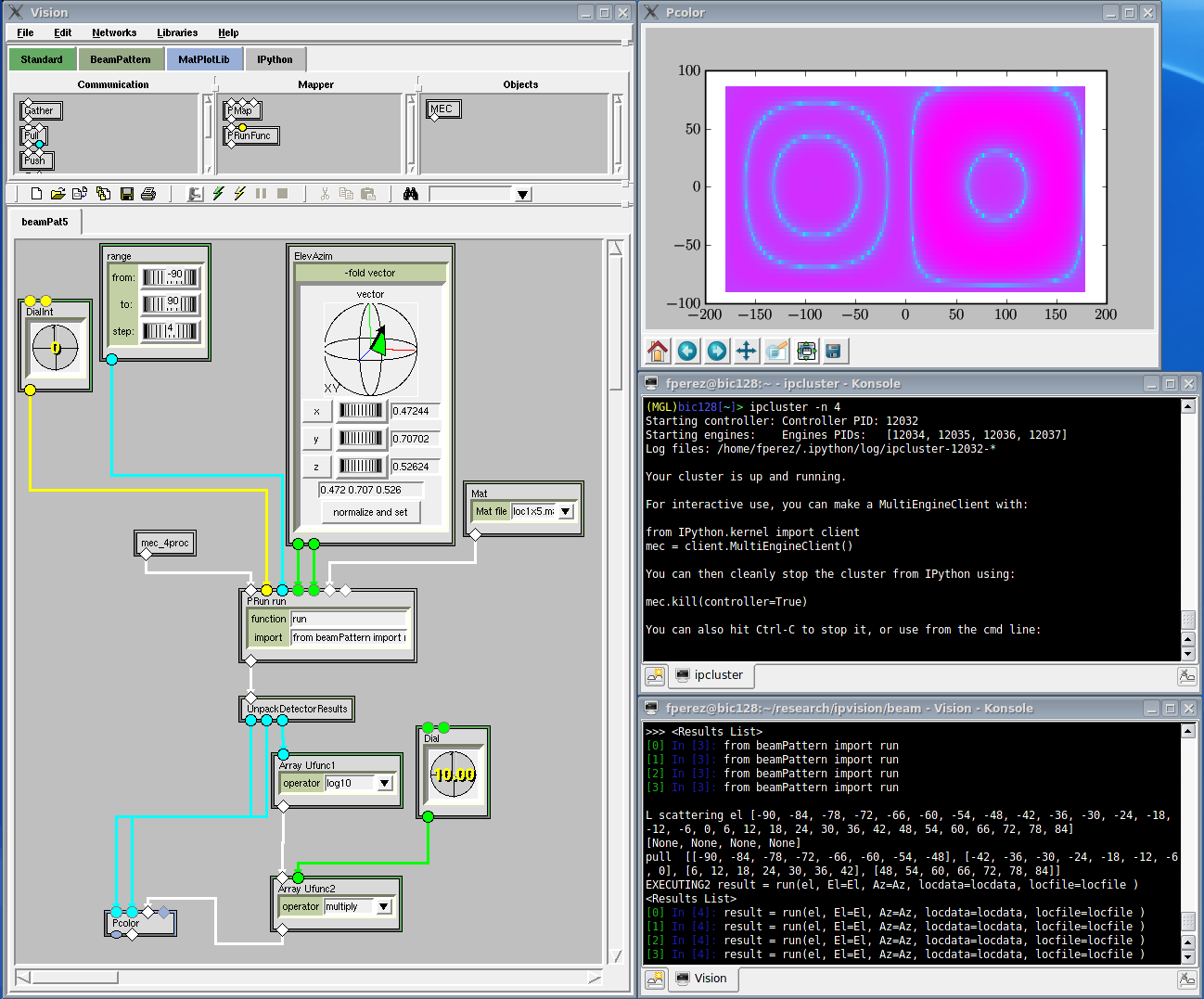

I got a Vision network that reproduces the beam pattern demo working:

I created a package called beamPattern that provides the function run() in its __init__.py file.

A subpackage beamPattern/VisionInterface provides Vision nodes for:

- computing Elevation and Azimuth from a 3D vector

- reading .mat files

- taking the results gathered from the engines and creating the output that a single engine would have produced
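As an illustration of the first node in the list, elevation and azimuth can be derived from a 3D vector with two `atan2` calls. This is a sketch, not the node's actual code: the function name is hypothetical, and the angle convention (azimuth from the +x axis in the x-y plane, elevation above that plane, both in degrees) is an assumption that may differ from the one beamPattern uses.

```python
import math


def elevation_azimuth(x, y, z):
    """Return (elevation, azimuth) in degrees for the direction (x, y, z).

    Assumed convention: azimuth is measured in the x-y plane from the +x
    axis toward +y, and elevation is the angle above the x-y plane.
    """
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return elevation, azimuth
```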

The Mec node connects to a controller. In my network it was local, but a furl can be specified to connect to a remote controller.

The PRun Func node is from the IPython library of nodes. The import statement is used to get the run function from the beamPattern package, and by putting “run” in the function entry of this node we push this function to the engines. In addition, the node will create input ports for all arguments of the function being pushed (i.e. the run function).

The second input port on PRun Func takes an integer specifying the rank of the argument we want to scatter. All other arguments will be pushed to the engines.
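The difference between scattering one argument and pushing the others can be sketched in plain Python. Everything here is an assumption made for illustration: the helper names are hypothetical, the rank is taken to be a zero-based position in the argument list, and the scattered argument is split into contiguous blocks of near-equal size, which may not match what the node does internally.

```python
def scatter_blocks(values, n_engines):
    """Split values into n_engines contiguous blocks of near-equal size."""
    base, extra = divmod(len(values), n_engines)
    blocks, start = [], 0
    for engine in range(n_engines):
        # The first `extra` engines take one additional element each.
        size = base + (1 if engine < extra else 0)
        blocks.append(values[start:start + size])
        start += size
    return blocks


def plan_arguments(args, scatter_rank, n_engines):
    """Return one argument list per engine: scatter one argument, copy the rest."""
    pieces = scatter_blocks(args[scatter_rank], n_engines)
    return [
        [piece if rank == scatter_rank else arg for rank, arg in enumerate(args)]
        for piece in pieces
    ]
```

With two engines and rank 1, `plan_arguments([30.0, [1, 2, 3, 4], "d"], 1, 2)` gives each engine the full first and third arguments but only half of the second.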

The ElevAzim node has a 3D vector widget and computes the El and Az values, which are passed into the PRun Func node through the ports created automatically. The Mat node lets you select the .mat file, reads it, and passes the data to the locdata port created automatically on PRun Func.

The calculation is executed in parallel, and the results are gathered and output. Instead of having a list of 3 vectors we end up with a list of n*3 vectors, where n is the number of engines. unpackDectorResults will turn it into a list of 3. We then plot x, y, and 10*log10(z).
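The regrouping step can be pictured as follows. This is not the code of unpackDectorResults, only a sketch of the idea under an assumed layout: the gathered list is taken to be ordered x0, y0, z0, x1, y1, z1, ... by engine, and the function names are hypothetical.

```python
import math


def merge_engine_results(gathered, n_fields=3):
    """Concatenate per-engine (x, y, z) triples into a single triple."""
    if len(gathered) % n_fields:
        raise ValueError("expected a multiple of %d vectors" % n_fields)
    merged = [[] for _ in range(n_fields)]
    for index, vector in enumerate(gathered):
        # Assumed layout: x0, y0, z0, x1, y1, z1, ... in engine order.
        merged[index % n_fields].extend(vector)
    return merged


def to_decibels(values):
    """Apply 10*log10 to each value, as done for z before plotting."""
    return [10.0 * math.log10(v) for v in values]
```

With two engines, six gathered vectors collapse back into three, and the third can be passed through `to_decibels` before plotting.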

Installation

Unpack beamPattern into the site-packages directory for the MGL tools.

Place the attached IPythonNodes.py and StandardNodes.py into the Vision package of the MGL tools.

Place the attached items.py in the NetworkEditor package of the MGL tools.

Run Vision on the network beamPat5_net.py:

vision beamPat5_net.py

Once the network is running, you can:

- Double-click on the MEC node and either use an empty string for the furl to connect to a local engine, or cut and paste the furl of the engine you want to use.

- Click on the yellow lightning bolt to run the network.

- Try modifying the MAT file or changing the vector used to compute elevation and azimuth.

Fernando’s notes

I had to install IPython and all its dependencies for the python used by the MGL tools.

Then I had to install SciPy 0.6.0 for it, since the nodes needed SciPy. To do this I sourced the mglenv.sh script and then ran:

python setup.py install --prefix=~/usr/opt/mgl

Using PBS

The following PBS script can be used to start the engines:

#PBS -N bgranger-ipython

#PBS -j oe

#PBS -l walltime=00:10:00

#PBS -l nodes=4:ppn=4

cd $PBS_O_WORKDIR

export PATH=$HOME/usr/local/bin:$PATH

export PYTHONPATH=$HOME/usr/local/lib/python2.4/site-packages

/usr/local/bin/mpiexec -n 16 ipengine

If this file is called ipython_pbs.sh, then in one login window (i.e. on the head node, opt-login01.osc.edu), run ipcontroller. In another login window on the same node, submit the above script:

qsub ipython_pbs.sh
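In the PBS script, the engine count passed to mpiexec (16) has to match nodes times ppn (4 x 4). One way to keep the two in step is to generate the script from those two numbers. The sketch below is an illustration only: `render_pbs_script` and its default job name are hypothetical, and the paths are copied from the script above.

```python
def render_pbs_script(nodes, ppn, walltime="00:10:00", job_name="ipython-engines"):
    """Return PBS script text that starts nodes*ppn engines under mpiexec."""
    lines = [
        "#PBS -N %s" % job_name,
        "#PBS -j oe",
        "#PBS -l walltime=%s" % walltime,
        "#PBS -l nodes=%d:ppn=%d" % (nodes, ppn),
        "cd $PBS_O_WORKDIR",
        # Prepend so that system directories stay on PATH.
        "export PATH=$HOME/usr/local/bin:$PATH",
        "export PYTHONPATH=$HOME/usr/local/lib/python2.4/site-packages",
        # One engine per requested processor slot.
        "/usr/local/bin/mpiexec -n %d ipengine" % (nodes * ppn),
    ]
    return "\n".join(lines) + "\n"
```

Writing `render_pbs_script(4, 4)` to ipython_pbs.sh reproduces the 16-engine request; changing either number updates the mpiexec count with it.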

If you look at the first window, you will see some diagnostic output from ipcontroller. You can then get the furl from your own ~/.ipython/security directory and then connect to it remotely.

If this doesn’t work as advertised, you might need to set up an SSH tunnel:

ssh -L 10115:localhost:10105 bic
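The three parts of the `-L` argument are easy to mix up, so here is a small sketch that builds the same command from named pieces. `tunnel_command` is a hypothetical helper; the host name and ports are the ones from the command above.

```python
def tunnel_command(host, local_port, remote_port, remote_host="localhost"):
    """Build an `ssh -L` command forwarding local_port to remote_host:remote_port."""
    # -L local_port:remote_host:remote_port makes connections to local_port on
    # this machine come out at remote_host:remote_port as seen from `host`.
    forward = "%d:%s:%d" % (local_port, remote_host, remote_port)
    return ["ssh", "-L", forward, host]


command = tunnel_command("bic", local_port=10115, remote_port=10105)
print(" ".join(command))
```

With the tunnel up, the client would presumably have to be pointed at port 10115 on the local machine rather than at the controller's own address; that step is not covered in the notes above.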