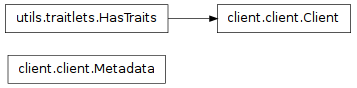

Inheritance diagram for IPython.parallel.client.client:

A semi-synchronous Client for the ZMQ cluster

Authors:

Bases: IPython.utils.traitlets.HasTraits

A semi-synchronous client to the IPython ZMQ cluster

Parameters:

- url_or_file : bytes or unicode; zmq url or path to ipcontroller-client.json
- profile : bytes
- context : zmq.Context
- debug : bool
- timeout : int/float

Session-related args:

- config : Config object
- username : str
- packer : str (import_string) or callable
- unpacker : str (import_string) or callable

SSH-related args:

These args configure the ssh tunnel; the credentials are used to forward connections over ssh to the Controller. Note that the IP given in `addr` must be relative to `sshserver`. The most basic case is to leave `addr` pointing to localhost (127.0.0.1) and set `sshserver` to the same machine the Controller is on. However, the only requirement is that `sshserver` can see the Controller (i.e. is within the same trusted network).

- sshserver : str
- sshkey : str; path to the ssh private key file used for login
- password : str
- paramiko : bool

Exec authentication args:

If even localhost is untrusted, you can get some protection against unauthorized execution by signing messages with HMAC digests. Messages are still sent as cleartext, so if someone can snoop your loopback traffic this will not protect your privacy, but it will prevent unauthorized execution.

- exec_key : str

Attributes:

- ids : list of int engine IDs
- history : list of msg_ids
- outstanding : set of msg_ids
- results : dict
- block : bool

Methods:

- spin
- wait
- execution methods
- data movement
- query methods
- control methods
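The exec-key signing described in the exec authentication args amounts to attaching an HMAC digest to each message and rejecting messages whose digest does not match. The sketch below shows the idea with Python's standard `hmac` module; the helper names `sign`/`verify`, the choice of SHA-256, and the message bytes are assumptions for illustration, not the session's actual wire format.

```python
import hashlib
import hmac


def sign(exec_key: bytes, *parts: bytes) -> str:
    """Return a hex HMAC digest over the message parts, in order.

    Assumption: SHA-256 is chosen for illustration only; the digest
    used by the real session may differ.
    """
    mac = hmac.new(exec_key, digestmod=hashlib.sha256)
    for part in parts:
        mac.update(part)
    return mac.hexdigest()


def verify(exec_key: bytes, signature: str, *parts: bytes) -> bool:
    """Recompute the digest and compare it in constant time."""
    return hmac.compare_digest(sign(exec_key, *parts), signature)


key = b"shared-secret"
header = b'{"msg_type": "apply_request"}'
content = b'{"code": "a = 1"}'
sig = sign(key, header, content)

print(verify(key, sig, header, content))             # True
print(verify(key, sig, header, b'{"code": "a=2"}'))  # False: content was altered
print(verify(b"wrong-key", sig, header, content))    # False: signer lacks the key
```

Note that `content` travels in the clear either way; the digest only lets the receiver reject messages from anyone who does not hold `exec_key`.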

Abort specific jobs from the execution queues of target(s).

This is a mechanism to prevent jobs that have already been submitted from executing.

Parameters:

- jobs : msg_id, list of msg_ids, or AsyncResult
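Because `jobs` may be a single msg_id, a list of msg_ids, or an AsyncResult, a caller ultimately needs a flat list of msg_ids. A minimal sketch of that normalization follows; `StubAsyncResult` and `to_msg_ids` are hypothetical names, and the stub only assumes that an AsyncResult exposes the msg_ids it tracks.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Union


@dataclass
class StubAsyncResult:
    """Hypothetical stand-in for AsyncResult: it only carries msg_ids."""
    msg_ids: List[str] = field(default_factory=list)


Job = Union[str, StubAsyncResult]


def to_msg_ids(jobs: Union[Job, Iterable[Job]]) -> List[str]:
    """Flatten a msg_id, an AsyncResult, or a list of either into msg_ids."""
    if isinstance(jobs, (str, StubAsyncResult)):
        jobs = [jobs]
    msg_ids: List[str] = []
    for job in jobs:
        if isinstance(job, StubAsyncResult):
            msg_ids.extend(job.msg_ids)
        elif isinstance(job, str):
            msg_ids.append(job)
        else:
            raise TypeError(f"not a msg_id or AsyncResult: {job!r}")
    return msg_ids


print(to_msg_ids("abc"))                                  # ['abc']
print(to_msg_ids([StubAsyncResult(["m1", "m2"]), "m3"]))  # ['m1', 'm2', 'm3']
```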

A boolean (True, False) trait.

Get a list of all the names of this class’s traits.

This method is just like the trait_names() method, but is unbound.

Get a list of all the traits of this class.

This method is just like the traits() method, but is unbound.

The TraitTypes returned don’t know anything about the values that the various HasTraits instances are holding.

This follows the same algorithm as traits does, and offers no simple way to require merely that a metadata name exists, regardless of its value. This is because get_metadata returns None if a metadata key doesn’t exist.
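The metadata filtering rule can be sketched as an equality test against each trait’s metadata, with missing keys read as None. `filter_traits` and the metadata table below are hypothetical, and the sketch only handles plain values; it shows why "key exists with any value" cannot be expressed this way: a trait with no `config` key and a trait with `config=None` are indistinguishable.

```python
from typing import Any, Dict, List


def filter_traits(trait_metadata: Dict[str, Dict[str, Any]], **metadata: Any) -> List[str]:
    """Names of traits whose metadata equals every given key=value pair.

    Missing keys read as None (mirroring get_metadata), so a query for
    the value None also matches traits that lack the key entirely.
    """
    return [
        name
        for name, meta in trait_metadata.items()
        if all(meta.get(key) == value for key, value in metadata.items())
    ]


# Hypothetical metadata table: trait name -> metadata dict.
traits = {
    "block": {"config": True},
    "debug": {},                 # no "config" key at all
    "profile": {"config": None},
}

print(filter_traits(traits, config=True))  # ['block']
print(filter_traits(traits, config=None))  # ['debug', 'profile']: missing == None
```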

Clear the namespace in target(s).

Query the Hub’s TaskRecord database

This will return a list of task record dicts that match `query`.

Parameters:

- query : mongodb query dict
- keys : list of strs [optional]
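To make the shape of `query` concrete: a MongoDB-style query maps record fields either to a value (meaning equality) or to an operator dict. The sketch below evaluates a small subset of operators in pure Python against in-memory records and applies an optional `keys` projection. The record field names (`msg_id`, `engine_uuid`, `completed`) and the helper names are illustrative assumptions, and the Hub’s real database backend may support more operators than this.

```python
import operator
from typing import Any, Callable, Dict, List, Optional, Sequence

# The small subset of MongoDB query operators this sketch understands.
OPS: Dict[str, Callable[[Any, Any], bool]] = {
    "$eq": operator.eq,
    "$ne": operator.ne,
    "$lt": operator.lt,
    "$gt": operator.gt,
    "$in": lambda value, options: value in options,
}


def matches(record: Dict[str, Any], query: Dict[str, Any]) -> bool:
    """True if the record satisfies every field condition in the query."""
    for key, cond in query.items():
        value = record.get(key)
        if isinstance(cond, dict):
            # Operator form, e.g. {"completed": {"$ne": None}}
            if not all(OPS[op](value, arg) for op, arg in cond.items()):
                return False
        elif value != cond:  # a bare value means equality
            return False
    return True


def query_records(records: Sequence[Dict[str, Any]], query: Dict[str, Any],
                  keys: Optional[List[str]] = None) -> List[Dict[str, Any]]:
    """Return matching records, optionally projected onto `keys`."""
    hits = [r for r in records if matches(r, query)]
    if keys is not None:
        hits = [{k: r.get(k) for k in keys} for r in hits]
    return hits


records = [
    {"msg_id": "a", "engine_uuid": "e0", "completed": None},
    {"msg_id": "b", "engine_uuid": "e1", "completed": "2012-01-01T10:00"},
    {"msg_id": "c", "engine_uuid": "e0", "completed": "2012-01-01T10:05"},
]
done_on_e0 = {"completed": {"$ne": None}, "engine_uuid": "e0"}
print(query_records(records, done_on_e0, keys=["msg_id"]))  # [{'msg_id': 'c'}]
```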

A boolean (True, False) trait.

Construct a DirectView object.

If no targets are specified, create a DirectView using all engines.

Parameters:

- targets : list, slice, int, etc. [default: use all engines]
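The `targets` argument accepts several forms. A minimal sketch of turning them into a concrete list of engine IDs follows, under stated assumptions: None means all engines, an int is an engine ID, a slice selects by position within `ids`, and unknown IDs are rejected. `resolve_targets` is a hypothetical helper, not part of the Client API.

```python
from typing import List, Sequence, Union

Targets = Union[None, int, slice, Sequence[int]]


def resolve_targets(ids: Sequence[int], targets: Targets = None) -> List[int]:
    """Turn a targets spec into a list of engine IDs (hypothetical helper)."""
    if targets is None:
        return list(ids)                 # default: use all engines
    if isinstance(targets, slice):
        return list(ids)[targets]        # slice by position within ids
    if isinstance(targets, int):
        targets = [targets]
    unknown = [t for t in targets if t not in ids]
    if unknown:
        raise ValueError(f"no such engine(s): {unknown}")
    return list(targets)


ids = [0, 1, 2, 3]
print(resolve_targets(ids))                        # [0, 1, 2, 3]
print(resolve_targets(ids, slice(None, None, 2)))  # [0, 2]
print(resolve_targets(ids, 3))                     # [3]
```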

Retrieve a result by msg_id or history index, wrapped in an AsyncResult object.

If the client already has the results, no request to the Hub will be made.

This is a convenient way to construct AsyncResult objects, which are wrappers that include metadata about execution, and allow for awaiting results that were not submitted by this Client.

It can also be a convenient way to retrieve the metadata associated with blocking execution, since it always retrieves

Parameters:

- indices_or_msg_ids : integer history index, str msg_id, or list of either
- block : bool

Returns:

- AsyncResult
- AsyncHubResult

Examples

In [10]: r = client.apply()
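The `indices_or_msg_ids` argument mixes two kinds of reference. A minimal sketch of resolving them against the client’s `history` list follows, assuming integer indices behave like Python list indices (so -1 is the most recent submission) and strings are already msg_ids. `resolve_msg_ids` is a hypothetical helper, and the sketch does not contact the Hub.

```python
from typing import List, Sequence, Union

Ref = Union[int, str]


def resolve_msg_ids(history: Sequence[str], refs: Union[Ref, Sequence[Ref]]) -> List[str]:
    """Map history indices and/or msg_ids to a list of msg_ids."""
    if isinstance(refs, (int, str)):
        refs = [refs]
    msg_ids = []
    for ref in refs:
        if isinstance(ref, int):
            msg_ids.append(history[ref])  # list-style indexing into history
        elif isinstance(ref, str):
            msg_ids.append(ref)           # already a msg_id
        else:
            raise TypeError(f"expected int or str, got {ref!r}")
    return msg_ids


history = ["m0", "m1", "m2"]
print(resolve_msg_ids(history, -1))         # ['m2']
print(resolve_msg_ids(history, [0, "m9"]))  # ['m0', 'm9']
```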

An instance of a Python list.

Get the Hub’s history

Just like the Client, the Hub has a history, which is a list of msg_ids. This will contain the history of all clients, and, depending on configuration, may contain history across multiple cluster sessions.

Any msg_id returned here is a valid argument to get_result.

Returns:

- msg_ids : list of strs

Always up-to-date ids property.

Construct a LoadBalancedView object.

If no arguments are specified, create a LoadBalancedView using all engines.

Parameters:

- targets : list, slice, int, etc. [default: use all engines]

A trait whose value must be an instance of a specified class.

The value can also be an instance of a subclass of the specified class.

Setup a handler to be called when a trait changes.

This is used to setup dynamic notifications of trait changes.

Static handlers can be created by defining methods on a HasTraits subclass with the naming convention ‘_[traitname]_changed’. Thus, to create a static handler for the trait ‘a’, create the method _a_changed(self, name, old, new) (fewer arguments can be used, see below).

Parameters:

- handler : callable
- name : list, str, None
- remove : bool
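The static-handler naming convention can be illustrated with a toy class that, on every attribute assignment, looks up a method named `_<name>_changed` and calls it with the old and new values. `ToyHasTraits` and `Settings` are hypothetical and deliberately simpler than HasTraits: there is no type validation, and no dynamic handlers registered through on_trait_change.

```python
from typing import Any, List, Tuple


class ToyHasTraits:
    """Toy model of the '_[traitname]_changed' static-handler convention.

    Not IPython's traitlets: every attribute assignment counts as a trait
    change, and handlers are always called as (name, old, new).
    """

    def __setattr__(self, name: str, new: Any) -> None:
        old = getattr(self, name, None)
        super().__setattr__(name, new)
        handler = getattr(self, f"_{name}_changed", None)
        if handler is not None and old != new:
            handler(name, old, new)


class Settings(ToyHasTraits):
    a = 0  # class-level default plays the role of the trait's default value

    def __init__(self) -> None:
        self.changes: List[Tuple[str, Any, Any]] = []

    def _a_changed(self, name: str, old: Any, new: Any) -> None:
        self.changes.append((name, old, new))


s = Settings()
s.a = 5
s.a = 5  # unchanged value: no notification
s.a = 7
print(s.changes)  # [('a', 0, 5), ('a', 5, 7)]
```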

An instance of a Python set.

A trait for unicode strings.

Tell the Hub to forget results.

Individual results can be purged by msg_id, or the entire history of specific targets can be purged.

Use purge_results(‘all’) to scrub everything from the Hub’s db.

Parameters:

- jobs : str or list of str or AsyncResult objects
- targets : int/str/list of ints/strs

Fetch the status of engine queues.

Parameters:

- targets : int/str/list of ints/strs
- verbose : bool

Resubmit one or more tasks.

In-flight tasks may not be resubmitted.

Parameters:

- indices_or_msg_ids : integer history index, str msg_id, or list of either
- block : bool

Returns:

- AsyncHubResult
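The in-flight restriction can be expressed as a check against the set of still-outstanding msg_ids. This is a hypothetical client-side sketch: `check_resubmittable` is not part of the Client API, `outstanding` merely plays the role of the attribute of the same name, and where IPython actually enforces the restriction is not shown here.

```python
from typing import Iterable, List, Set


def check_resubmittable(msg_ids: Iterable[str], outstanding: Set[str]) -> List[str]:
    """Return msg_ids unchanged if none is still in flight, else raise."""
    msg_ids = list(msg_ids)
    in_flight = [m for m in msg_ids if m in outstanding]
    if in_flight:
        raise ValueError(f"cannot resubmit in-flight tasks: {in_flight}")
    return msg_ids


outstanding = {"m2"}
print(check_resubmittable(["m0", "m1"], outstanding))  # ['m0', 'm1']
```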

Check on the status of the result(s) of the apply request with msg_ids.

If status_only is False, then the actual results will be retrieved, else only the status of the results will be checked.

Parameters:

- msg_ids : list of msg_ids
- status_only : bool (default: True)

Returns:

- results : dict

A trait whose value must be an instance of a specified class.

The value can also be an instance of a subclass of the specified class.

Construct and send an apply message via a socket.

This is the principal method with which all engine execution is performed by views.

Terminates one or more engine processes, optionally including the hub.

Flush any registration notifications and execution results waiting in the ZMQ queue.

Get metadata values for trait by key.

Get a list of all the names of this class’s traits.

Get a list of all the traits of this class.

The TraitTypes returned don’t know anything about the values that the various HasTraits instances are holding.

This follows the same algorithm as traits does, and offers no simple way to require merely that a metadata name exists, regardless of its value. This is because get_metadata returns None if a metadata key doesn’t exist.

Wait on one or more jobs, for up to `timeout` seconds.

Parameters:

- jobs : int, str, or list of ints and/or strs, or one or more AsyncResult objects
- timeout : float

Returns:

- True : when all msg_ids are done
- False : timeout reached, some msg_ids still outstanding
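The True/False contract of `wait` can be mimicked with the standard library’s `concurrent.futures.wait`, with futures standing in for msg_ids or AsyncResults. This is an analogy under that assumption, not how the Client tracks outstanding work; `wait_all` is a hypothetical helper.

```python
import concurrent.futures as cf
import time
from typing import Iterable, Optional


def wait_all(futures: Iterable[cf.Future], timeout: Optional[float] = None) -> bool:
    """True once every future is done; False if timeout expires first."""
    _done, not_done = cf.wait(futures, timeout=timeout, return_when=cf.ALL_COMPLETED)
    return not not_done


with cf.ThreadPoolExecutor(max_workers=2) as pool:
    fast = pool.submit(time.sleep, 0.01)
    slow = pool.submit(time.sleep, 0.5)
    print(wait_all([fast], timeout=1.0))         # True
    print(wait_all([fast, slow], timeout=0.05))  # False: slow is still outstanding
```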

Bases: dict

Subclass of dict for initializing metadata values.

Attribute access works on keys.

These objects have a strict set of keys: trying to add a new key raises an error.
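A minimal sketch of a dict subclass with the properties described above: attribute access maps onto keys, and the key set is fixed when the object is created. `StrictMetadata` and its default keys are hypothetical, an illustrative subset rather than the real Metadata keys. One CPython detail matters here: `dict.update` does not call an overridden `__setitem__`, so the sketch overrides `update` as well.

```python
from typing import Any


class StrictMetadata(dict):
    """Dict with a fixed key set and attribute access to keys (sketch)."""

    _defaults = {"msg_id": None, "submitted": None, "completed": None, "stdout": ""}

    def __init__(self, *args: Any, **kwargs: Any) -> None:
        super().__init__(self._defaults)  # fill every allowed key first
        self.update(*args, **kwargs)      # then validate any overrides

    def __setitem__(self, key: str, value: Any) -> None:
        if key not in self:
            raise KeyError(f"invalid key: {key!r}")
        super().__setitem__(key, value)

    def update(self, *args: Any, **kwargs: Any) -> None:
        # dict.update bypasses an overridden __setitem__, so route through it.
        for key, value in dict(*args, **kwargs).items():
            self[key] = value

    def __getattr__(self, key: str) -> Any:
        try:
            return self[key]
        except KeyError:
            raise AttributeError(key) from None

    def __setattr__(self, key: str, value: Any) -> None:
        self[key] = value  # unknown names raise KeyError, just like new keys


md = StrictMetadata(msg_id="abc")
md.stdout = "hello\n"
print(md.msg_id, md["stdout"] == "hello\n")  # abc True
```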

D.clear() -> None. Remove all items from D.

D.copy() -> a shallow copy of D

v defaults to None.

D.get(k[,d]) -> D[k] if k in D, else d. d defaults to None.

D.has_key(k) -> True if D has a key k, else False

D.items() -> list of D’s (key, value) pairs, as 2-tuples

D.iteritems() -> an iterator over the (key, value) items of D

D.iterkeys() -> an iterator over the keys of D

D.itervalues() -> an iterator over the values of D

D.keys() -> list of D’s keys

D.pop(k[,d]) -> v, remove specified key and return the corresponding value. If key is not found, d is returned if given, otherwise KeyError is raised

D.popitem() -> (k, v), remove and return some (key, value) pair as a 2-tuple; but raise KeyError if D is empty.

D.setdefault(k[,d]) -> D.get(k,d), also set D[k]=d if k not in D

D.update(E, **F) -> None. Update D from dict/iterable E and F.

If E has a .keys() method, does: `for k in E: D[k] = E[k]`

If E lacks a .keys() method, does: `for (k, v) in E: D[k] = v`

In either case, this is followed by: `for k in F: D[k] = F[k]`
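The three cases in the `update` description can be seen on a plain dict (shown in Python 3, where `update` behaves the same way as described here):

```python
d = {"a": 1}
d.update({"b": 2})               # E has .keys(): for k in E: d[k] = E[k]
d.update([("c", 3), ("a", 10)])  # E lacks .keys(): for (k, v) in E: d[k] = v
d.update({"d": 4}, a=100)        # keyword arguments F are applied last
print(d)  # {'a': 100, 'b': 2, 'c': 3, 'd': 4}
```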

D.values() -> list of D’s values