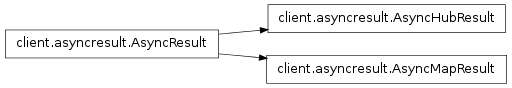

Inheritance diagram for IPython.parallel.client.asyncresult:

AsyncResult objects for the client

Authors:

class IPython.parallel.client.asyncresult.AsyncHubResult

Bases: IPython.parallel.client.asyncresult.AsyncResult

Class to wrap pending results that must be requested from the Hub.

Note that waiting on or polling these objects requires polling the Hub over the network, so use AsyncHubResult.wait() sparingly.

Abort the tasks associated with this result.

republish the outputs of the computation

| Parameter | Type | Default |
|---|---|---|
| groupby | str | type |

elapsed time since initial submission

Return the result when it arrives.

If timeout is not None and the result does not arrive within timeout seconds, TimeoutError is raised. If the remote call raised an exception, that exception is re-raised by get() wrapped in a RemoteError.

Get the results as a dict, keyed by engine_id.

timeout behavior is described in get().

property for accessing execution metadata.

the number of tasks which have been completed at this point.

Fractional progress would be given by 1.0 * ar.progress / len(ar)

result property wrapper for get(timeout=0).

Return whether the call has completed.

result property wrapper for get(timeout=0).

result property as a dict.

Check whether the messages for this result have been sent.

serial computation time of a parallel calculation

Computed as the sum of (completed - started) over all tasks.

Return whether the call completed without raising an exception.

Will raise AssertionError if the result is not ready.

compute the difference between two sets of timestamps

The default behavior is to use the earliest timestamp of the first set and the latest timestamp of the second, but this can be changed by passing a different start_key or end_key.

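| Parameter | Type | Description |
|---|---|---|
| start | one or more datetime objects | e.g. ar.submitted |
| end | one or more datetime objects | e.g. ar.received |
| start_key | callable | selects the timestamp to use from start (default: the earliest) |
| end_key | callable | selects the timestamp to use from end (default: the latest) |

| Returns | Type |
|---|---|
| dt | float |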

wait for result to complete.

wait for pyzmq send to complete.

This is necessary when sending arrays that you intend to edit in place. timeout is in seconds; TimeoutError is raised if it is reached before the send completes.

interactive wait, printing progress at regular intervals

actual computation time of a parallel calculation

Computed as the time between the latest received stamp and the earliest submitted.

This is only reliable if the Client was spinning or waiting when the task finished, because the received timestamp is created when a result is pulled off the zmq queue, which happens as a result of client.spin().

To compare other pairs of timestamps in the same way, use AsyncResult.timedelta.

class IPython.parallel.client.asyncresult.AsyncMapResult

Bases: IPython.parallel.client.asyncresult.AsyncResult

Class for representing results of non-blocking gathers.

This will properly reconstruct the gather.

This class is iterable at any time, and will wait on results as they come.

If ordered=False, then the first results to arrive will come first, otherwise results will be yielded in the order they were submitted.
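The ordering rule can be tried out without an IPython cluster. The sketch below swaps in the standard library's concurrent.futures as a stand-in (it is not IPython's implementation): walking the futures in submission order corresponds to ordered=True, while concurrent.futures.as_completed corresponds to ordered=False. The slow_square and gather helpers are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def slow_square(x):
    # Larger inputs sleep less, so they tend to finish first.
    time.sleep(max(0.0, 0.15 - 0.05 * x))
    return x * x


def gather(inputs, ordered=True):
    """Hypothetical helper mimicking ordered vs. unordered result iteration."""
    with ThreadPoolExecutor(max_workers=max(1, len(inputs))) as pool:
        futures = [pool.submit(slow_square, x) for x in inputs]
        # ordered=True: submission order; ordered=False: completion order.
        source = futures if ordered else as_completed(futures)
        return [f.result() for f in source]


print(gather([0, 1, 2], ordered=True))   # [0, 1, 4]
print(gather([0, 1, 2], ordered=False))  # completion order, timing-dependent
```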

Abort the tasks associated with this result.

republish the outputs of the computation

| Parameter | Type | Default |
|---|---|---|
| groupby | str | type |

elapsed time since initial submission

Return the result when it arrives.

If timeout is not None and the result does not arrive within timeout seconds, TimeoutError is raised. If the remote call raised an exception, that exception is re-raised by get() wrapped in a RemoteError.

Get the results as a dict, keyed by engine_id.

timeout behavior is described in get().

property for accessing execution metadata.

the number of tasks which have been completed at this point.

Fractional progress would be given by 1.0 * ar.progress / len(ar)

result property wrapper for get(timeout=0).

Return whether the call has completed.

result property wrapper for get(timeout=0).

result property as a dict.

Check whether the messages for this result have been sent.

serial computation time of a parallel calculation

Computed as the sum of (completed - started) over all tasks.

Return whether the call completed without raising an exception.

Will raise AssertionError if the result is not ready.

compute the difference between two sets of timestamps

The default behavior is to use the earliest timestamp of the first set and the latest timestamp of the second, but this can be changed by passing a different start_key or end_key.

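| Parameter | Type | Description |
|---|---|---|
| start | one or more datetime objects | e.g. ar.submitted |
| end | one or more datetime objects | e.g. ar.received |
| start_key | callable | selects the timestamp to use from start (default: the earliest) |
| end_key | callable | selects the timestamp to use from end (default: the latest) |

| Returns | Type |
|---|---|
| dt | float |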

Wait until the result is available or until timeout seconds pass.

This method always returns None.

wait for pyzmq send to complete.

This is necessary when sending arrays that you intend to edit in place. timeout is in seconds; TimeoutError is raised if it is reached before the send completes.

interactive wait, printing progress at regular intervals

actual computation time of a parallel calculation

Computed as the time between the latest received stamp and the earliest submitted.

This is only reliable if the Client was spinning or waiting when the task finished, because the received timestamp is created when a result is pulled off the zmq queue, which happens as a result of client.spin().

To compare other pairs of timestamps in the same way, use AsyncResult.timedelta.

class IPython.parallel.client.asyncresult.AsyncResult

Bases: object

Class for representing results of non-blocking calls.

Provides the same interface as multiprocessing.pool.AsyncResult.
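Since this class mirrors multiprocessing.pool.AsyncResult, the standard library's ThreadPool offers a cluster-free way to try the same get/wait/ready/successful pattern. This is an analogy only: the sketch runs local threads, not IPython engines, and slow_add is a made-up example function.

```python
import time
from multiprocessing import TimeoutError
from multiprocessing.pool import ThreadPool


def slow_add(a, b):
    time.sleep(0.2)  # simulate a slow remote call
    return a + b


with ThreadPool(processes=2) as pool:
    ar = pool.apply_async(slow_add, (2, 3))
    try:
        ar.get(timeout=0.01)  # far shorter than the 0.2 s the call takes
    except TimeoutError:
        print("not ready yet")
    ar.wait()  # blocks until the call finishes; returns None
    print(ar.ready(), ar.successful())  # True True
    print(ar.get())  # 5
```

One difference to keep in mind: on Python 3.7 and later, multiprocessing's successful() raises ValueError rather than AssertionError when the result is not ready.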

Abort the tasks associated with this result.

republish the outputs of the computation

| Parameter | Type | Default |
|---|---|---|
| groupby | str | type |

elapsed time since initial submission

Return the result when it arrives.

If timeout is not None and the result does not arrive within timeout seconds, TimeoutError is raised. If the remote call raised an exception, that exception is re-raised by get() wrapped in a RemoteError.

Get the results as a dict, keyed by engine_id.

timeout behavior is described in get().

property for accessing execution metadata.

the number of tasks which have been completed at this point.

Fractional progress would be given by 1.0 * ar.progress / len(ar)
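A quick check of the fractional-progress formula, using a hypothetical FakeAsyncResult that provides only the two things the formula reads: a progress count and a length equal to the number of tasks.

```python
class FakeAsyncResult:
    """Hypothetical stand-in exposing only what the progress formula uses."""

    def __init__(self, n_tasks, n_done):
        self.progress = n_done  # tasks completed so far
        self._n_tasks = n_tasks

    def __len__(self):
        return self._n_tasks  # total number of tasks


def fractional_progress(ar):
    # 1.0 * guards against integer division on Python 2.
    return 1.0 * ar.progress / len(ar)


ar = FakeAsyncResult(n_tasks=8, n_done=3)
print(fractional_progress(ar))  # 0.375
```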

result property wrapper for get(timeout=0).

Return whether the call has completed.

result property wrapper for get(timeout=0).

result property as a dict.

Check whether the messages for this result have been sent.

serial computation time of a parallel calculation

Computed as the sum of (completed - started) over all tasks.
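A minimal sketch of the serial-time calculation, assuming each task's start and completion timestamps are available as two parallel lists of datetime objects. The serial_time helper and the choice to report seconds are assumptions of this sketch, not the library's code.

```python
from datetime import datetime, timedelta


def serial_time(started, completed):
    """Hypothetical helper: sum of (completed - started) over tasks, in seconds."""
    total = timedelta(0)
    for s, c in zip(started, completed):
        total += c - s  # per-task duration
    return total.total_seconds()


t0 = datetime(2013, 1, 1, 12, 0, 0)
started = [t0, t0 + timedelta(seconds=1)]
completed = [t0 + timedelta(seconds=2), t0 + timedelta(seconds=4)]
print(serial_time(started, completed))  # 5.0
```

In this example the two tasks overlap, so the 5.0 s of serial time exceeds the 4 s between the first start and the last completion.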

Return whether the call completed without raising an exception.

Will raise AssertionError if the result is not ready.

compute the difference between two sets of timestamps

The default behavior is to use the earliest timestamp of the first set and the latest timestamp of the second, but this can be changed by passing a different start_key or end_key.

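| Parameter | Type | Description |
|---|---|---|
| start | one or more datetime objects | e.g. ar.submitted |
| end | one or more datetime objects | e.g. ar.received |
| start_key | callable | selects the timestamp to use from start (default: the earliest) |
| end_key | callable | selects the timestamp to use from end (default: the latest) |

| Returns | Type |
|---|---|
| dt | float |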
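The selection rule can be sketched as a standalone function. timedelta_seconds is a hypothetical re-implementation of the documented behavior: start_key and end_key default to min and max, which pick the earliest and latest timestamps. Returning seconds as a float is an assumption of this sketch.

```python
from datetime import datetime, timedelta


def timedelta_seconds(start, end, start_key=min, end_key=max):
    """Hypothetical re-implementation of the documented timedelta semantics."""
    # Accept either a single datetime or a sequence of them.
    if isinstance(start, datetime):
        start = [start]
    if isinstance(end, datetime):
        end = [end]
    # Pick one timestamp from each side, then return the gap in seconds.
    return (end_key(end) - start_key(start)).total_seconds()


t0 = datetime(2013, 1, 1, 12, 0, 0)
submitted = [t0, t0 + timedelta(seconds=1)]
received = [t0 + timedelta(seconds=3), t0 + timedelta(seconds=5)]

print(timedelta_seconds(submitted, received))                 # 5.0
print(timedelta_seconds(submitted, received, start_key=max))  # 4.0
print(timedelta_seconds(submitted, received, end_key=min))    # 3.0
```

With the default keys this is the same quantity described for wall time: the latest received stamp minus the earliest submitted one.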

Wait until the result is available or until timeout seconds pass.

This method always returns None.

wait for pyzmq send to complete.

This is necessary when sending arrays that you intend to edit in place. timeout is in seconds; TimeoutError is raised if it is reached before the send completes.

interactive wait, printing progress at regular intervals
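A polling loop of this kind might look like the sketch below. It is not IPython's implementation: wait_interactive_sketch and TimedFakeResult are hypothetical, the loop only assumes an object with ready(), progress, and a length, and returning the final ready() state is a choice made for this sketch.

```python
import time


class TimedFakeResult:
    """Hypothetical stand-in: one task finishes every `per_task` seconds."""

    def __init__(self, n_tasks, per_task):
        self._n_tasks = n_tasks
        self._per_task = per_task
        self._t0 = time.monotonic()

    @property
    def progress(self):
        elapsed = time.monotonic() - self._t0
        return min(self._n_tasks, int(elapsed / self._per_task))

    def __len__(self):
        return self._n_tasks

    def ready(self):
        return self.progress >= self._n_tasks


def wait_interactive_sketch(ar, interval=1.0, timeout=None):
    """Poll `ar` until it is ready (or `timeout` expires), printing progress."""
    start = time.monotonic()
    while not ar.ready():
        elapsed = time.monotonic() - start
        if timeout is not None and elapsed >= timeout:
            break
        print("%i/%i tasks done after %.1fs" % (ar.progress, len(ar), elapsed))
        time.sleep(interval)
    return ar.ready()


wait_interactive_sketch(TimedFakeResult(n_tasks=4, per_task=0.2), interval=0.1)
```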

actual computation time of a parallel calculation

Computed as the time between the latest received stamp and the earliest submitted.

This is only reliable if the Client was spinning or waiting when the task finished, because the received timestamp is created when a result is pulled off the zmq queue, which happens as a result of client.spin().

To compare other pairs of timestamps in the same way, use AsyncResult.timedelta.